- April 7, 2024

- Posted by: Dordea Paul

- Categories: International, IT

We hear a lot about AI safety, but does that mean it’s heavily featured in research?

A new study from Georgetown University’s Emerging Technology Observatory suggests that, despite the noise, AI safety research occupies but a tiny minority of the industry’s research focus. The researchers analyzed over 260 million scholarly publications and found that a mere 2% of AI-related papers published between 2017 and 2022 directly addressed topics related to AI safety, ethics, robustness, or governance.

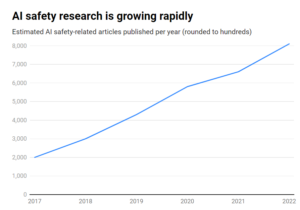

While the number of AI safety publications grew an impressive 315% over that period, from around 1,800 to over 7,000 per year, AI safety remains a peripheral topic within AI research as a whole.

Here are the key findings:

- Only 2% of AI research from 2017-2022 focused on AI safety

- AI safety research grew 315% in that period, but is dwarfed by overall AI research

- The US leads in AI safety research, while China lags behind

- Key challenges include robustness, fairness, transparency, and maintaining human control

Imagine an AGI system that is able to recursively improve itself, rapidly exceeding human intelligence while pursuing goals misaligned with our values. It’s a scenario that some argue could spiral out of our control. It’s not one-way traffic, however. In fact, a large number of AI researchers believe AI safety is overhyped.

Beyond that, some even think the hype has been manufactured to help Big Tech push through regulations and eliminate grassroots and open-source competitors. However, even today’s narrow AI systems, trained on past data, can exhibit biases, produce harmful content, violate privacy, and be used maliciously. So, while AI safety needs to look into the future, it also needs to address risks in the here and now, an area where current work is arguably insufficient as deepfakes, bias, and other issues continue to loom large.

Effective AI safety research needs to address nearer-term challenges as well as longer-term speculative risks.

The US leads AI safety research

Drilling down into the data, the US is the clear leader in AI safety research, home to 40% of related publications compared to 12% from China. China’s safety output also lags far behind its overall AI research: while 5% of American AI research touched on safety, only 1% of China’s did. One could speculate that Chinese research is simply harder to survey. Plus, China has been proactive about regulation, arguably more so than the US, so this data might not give the country’s AI industry a fair hearing.

At the institution level, Carnegie Mellon University, Google, MIT, and Stanford lead the pack. But globally, no organization produced more than 2% of the total safety-related publications, highlighting the need for a larger, more concerted effort.

Safety imbalances

So what can be done to correct this imbalance? That depends on whether one thinks AI safety is a pressing risk on par with nuclear war, pandemics, etc. There is no clear-cut answer to this question, making AI safety a highly speculative topic with little agreement among researchers. Safety and ethics research is also somewhat tangential to machine learning, requiring different skill sets and academic backgrounds, and it may not be well funded.

Closing the AI safety gap will also require confronting questions around openness and secrecy in AI development. The largest tech companies likely conduct extensive internal safety research that is never published. As the commercialization of AI heats up, corporations are becoming more protective of their AI breakthroughs.

OpenAI, for one, was a research powerhouse in its early days. The company used to produce in-depth independent audits of its products, labeling biases and risks, such as sexist bias in its CLIP project. Anthropic is still actively engaged in public AI safety research, frequently publishing studies on bias and jailbreaking.

DeepMind also documented the possibility of AI models establishing ‘emergent goals’ and actively contradicting their instructions or becoming adversarial to their creators. Overall, though, safety has taken a backseat to progress as Silicon Valley lives by its motto to ‘move fast and break stuff.’ The Georgetown study ultimately highlights that universities, governments, tech companies, and research funders need to invest more effort and money in AI safety.

Some have also called for an international body for AI safety, similar to the International Atomic Energy Agency (IAEA), which was established after a series of nuclear incidents that made deep international cooperation mandatory. Will AI need its own disaster to engage that level of state and corporate cooperation? Let’s hope not.

source: www.dailyai.com
